I’ve spent a few years in the world of generative AI now, and even picked up a certification in prompt engineering. The problem has gotten better over time, but I still notice that the rules given in a prompt start failing after a while. Here I’ll try to explain what the heck is going on.

You start with a prompt that could make a prompt engineer weep with joy. It’s clean, clear, and strict. Use active voice. No em dashes. Temperatures in Celsius. Translate to Swedish with pinpoint accuracy. Then somewhere along the way, things go sideways. Headers appear out of nowhere. Passive voice creeps in. Fahrenheit shows up like it owns the place. What just happened?

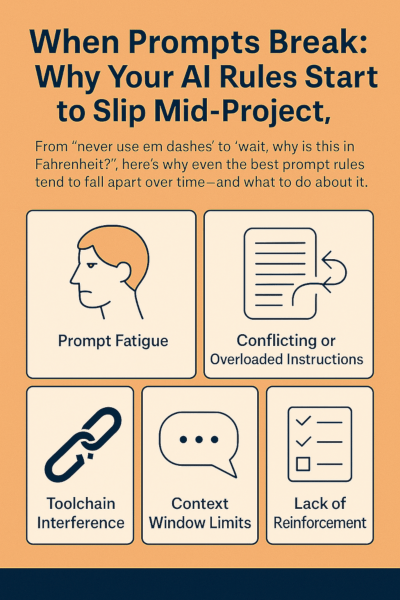

Welcome to the world of prompt deterioration, or prompt fatigue. It’s a slow unraveling that plagues even the most seasoned AI users and writers. Whether you’re translating, generating blog content, or scripting chatbot responses, chances are you’ve seen your prompt rules bend or vanish completely. So, why does it happen?

Prompt fatigue is real

It doesn’t just apply to people. Sure, you might stop reading your own checklist after the fifteenth repetition, but models do something similar. When faced with long, complex instructions, they tend to give more weight to the most recent text than to rules stated far earlier. If a rule isn’t reinforced, it fades. Out of prompt, out of mind.

Too many rules? Something’s got to give

Let’s say your prompt says, “Use natural language, sound casual, never use contractions, keep it formal.” That’s a contradiction buffet. The AI will pick the most compatible combo, usually the one that feels more natural. The stricter rules lose their footing, especially if they clash with tone or context.

The toolchain messes with you

Moving prompts between Google Docs, Notion, CMSs, and APIs? Copy-paste can strip formatting, introduce invisible characters, or reorder content. A Markdown conversion might mangle your bullet points. The AI interprets whatever text it actually receives, so if the input gets scrambled, your output will too.
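One way to catch this before the prompt reaches the model is to scan the pasted text for characters you can't see. The sketch below is a rough illustration, not a complete sanitizer: the `SUSPECTS` table and the function names `find_invisible` and `clean_prompt` are my own assumptions, and the list of characters is far from exhaustive.

```python
import unicodedata

# Characters that often sneak in through copy-paste, mapped to a replacement.
# This table is an illustrative assumption, not an exhaustive list.
# Note: removing the zero-width joiner can break some emoji and scripts.
SUSPECTS = {
    "\u00a0": " ",   # no-break space -> regular space
    "\u200b": "",    # zero-width space
    "\u200c": "",    # zero-width non-joiner
    "\u200d": "",    # zero-width joiner
    "\ufeff": "",    # byte order mark
    "\u2028": "\n",  # line separator
    "\u2029": "\n",  # paragraph separator
}


def find_invisible(text):
    """Return (index, Unicode name) for every suspect character in text."""
    return [
        (i, unicodedata.name(ch, "UNKNOWN"))
        for i, ch in enumerate(text)
        if ch in SUSPECTS
    ]


def clean_prompt(text):
    """Replace suspect characters and normalize line endings to \\n."""
    for ch, replacement in SUSPECTS.items():
        text = text.replace(ch, replacement)
    return text.replace("\r\n", "\n").replace("\r", "\n")
```

Running `find_invisible` on a prompt right after pasting it shows where the hidden characters sit, and `clean_prompt` gives you a version that is safer to send on.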

The context window has limits

The model can only keep so much in view. If your prompt is long and your task is longer, early instructions tend to lose their grip, and once the conversation outgrows the context window, the oldest text may drop out entirely. A translation rule you gave at the top might not survive to the 15th paragraph. You’re better off chunking the task or restating key rules every few sections.
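Chunking and restating can be combined: split the text into small batches and put the full rule list at the top of every batch. Here is a minimal sketch of that idea. The rule list, the chunk size of five paragraphs, and the function names are all assumptions picked for illustration, and sending each prompt to a model is left out on purpose.

```python
# Example rules taken from the scenario in this post; adjust to your own.
RULES = [
    "Use active voice.",
    "No em dashes.",
    "Give temperatures in Celsius.",
]


def chunk_paragraphs(text, max_paragraphs=5):
    """Split text on blank lines and group the paragraphs into chunks."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    return [
        paragraphs[i:i + max_paragraphs]
        for i in range(0, len(paragraphs), max_paragraphs)
    ]


def build_prompts(text, rules=RULES, max_paragraphs=5):
    """Return one prompt per chunk, each starting with the full rule list."""
    header = "Follow these rules:\n" + "\n".join(f"- {r}" for r in rules)
    task = "Translate the following text to Swedish:"
    return [
        f"{header}\n\n{task}\n\n" + "\n\n".join(chunk)
        for chunk in chunk_paragraphs(text, max_paragraphs)
    ]
```

Each prompt is self-contained, so the rules are never more than a handful of paragraphs away from the text they apply to. The trade-off is that the model no longer sees the whole document at once, which can cost you consistency in terminology across chunks.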

No reinforcement = no retention

AI doesn’t “learn” mid-session unless you bake rules into each output or refresh them consistently. Even then, rules can get deprioritized if they’re not tied directly to the task at hand. If the AI sees that ignoring a rule still gets the job done, it’ll keep doing that.

Real-world chaos

- A 10,000-word travel piece starts with perfect active voice. By page 5, it’s a passive swamp.

- A blog series where “never use listicles” turns into “here are 5 reasons why.”

- A Swedish translation project that holds the line against em dashes until paragraph 37.

You’re not alone. It happens.

How to fight prompt drift

- Break your task into smaller parts.

- Repeat the rules at key intervals.

- Use reusable prompt templates.

- Check outputs regularly and correct early.

- Don’t assume the AI remembers what you told it ten minutes ago. Or even one minute ago.
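Checking outputs regularly is easier when part of the check is automated. The sketch below flags a few of the rule violations mentioned in this post. The `CHECKS` table and the `check_output` function are hypothetical names, and the patterns are rough heuristics I made up for illustration. Rules like "use active voice" can't be checked reliably this way and still need a human eye.

```python
import re

# Each entry maps a rule name to a pattern that signals a likely violation.
# These patterns are rough heuristics chosen for this sketch.
CHECKS = {
    "no em dashes": re.compile("\u2014"),
    "celsius only": re.compile(r"\d\s*°\s*F\b|\bFahrenheit\b", re.IGNORECASE),
    "no markdown headers": re.compile(r"^#{1,6}\s", re.MULTILINE),
}


def check_output(text):
    """Return the names of the rules that text appears to break."""
    return [name for name, pattern in CHECKS.items() if pattern.search(text)]
```

Run it on every chunk as it comes back. If the list is not empty, correct course right away instead of discovering the drift at paragraph 37.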

The truth is, prompt deterioration is a sign you’re working on something big, messy, and probably important. It’s not failure. It’s friction. And friction can be managed.

If you want consistent results, don’t just prompt. Prompt with structure, repetition, and just enough paranoia to keep things sharp. The model won’t mind. But your editor will thank you.
